How AI and Deep Learning Enhance Airport Operations

Many of us are concerned that Artificial Intelligence (AI) will essentially take over our world someday and that AI overlords will eventually run our lives. While that dystopian future is frightening, the truth is that AI is an extremely useful tool, especially for tasks that are repetitive, mundane, dangerous, or difficult for humans to perform.

A great example is airport operations. Passenger volumes continue to climb steadily, yet labor shortages make it increasingly difficult to staff airport positions. AI-enhanced automation can help close that gap, and in some cases it solves real problems that were nearly impossible to solve reliably with traditional automation approaches.

There are several airport applications where AI and Deep Learning, deployed as enhanced intelligence at the sensor level, provide tremendous benefits, including:

Jet Bridge Autonomy

A Deep Learning-enabled sensor accurately feeds location data to the Jet Bridge Programmable Logic Controller (PLC), guiding the autonomous driving of the Jet Bridge to the passenger boarding door in record time.

Human Intrusion Detection

A color 3D sensor with an on-board AI coprocessor is trained to detect people attempting to pass through the security doors behind ticket counters and in some baggage claim areas, helping prevent accidental or unauthorized entry into the secure area.

Automatic Bag Hygiene and Baggage Classification

Color 3D sensors and an AI-trained edge device examine the baggage flow on the fly and determine not only which of more than 30 classes each bag belongs to, but also whether the item is conveyable or non-conveyable. Overall system throughput improves because there are fewer baggage jams.

We’ll discuss each of these applications in more detail in this article. But first, let’s spend a little time understanding AI and Deep Learning.

AI and Deep Learning

At the heart of much of today’s AI is the concept of a neural network, which mimics the way the human brain is structured. The human brain contains an estimated 85 billion neurons, and the synapses that interconnect these neurons store our longer-term memories. A memory is like a stored pathway through a large set of neurons; when the pathway is reinforced through repeated activity, the memory lasts longer.

Much of today’s AI mimics the brain with large numbers of artificial neurons. Each artificial neuron computes a weighted sum of its inputs and passes the result through an activation function that determines how strongly the neuron “fires”; its output is generally a graded value rather than a simple on/off state. These neurons are interconnected in layers to imitate how the brain operates. Just as the human brain learns by adjusting the strength of the synapses between neurons, the neural network “learns” by adapting the weights of the connections between its nodes. A network with multiple layers is called a deep neural network, and each type of neural network has unique characteristics and uses.
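To make the difference from a binary on/off unit concrete, here is a minimal Python sketch of a single artificial neuron. It forms a weighted sum of its inputs plus a bias, squashes the result with a sigmoid activation, and then “learns” by nudging its connection weights with one gradient-descent step. The inputs, starting weights, target and learning rate are arbitrary illustrative values, not taken from any real sensor.

```python
import math

def sigmoid(z):
    """Smooth activation: maps any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

def train_step(inputs, weights, bias, target, lr=0.5):
    """One gradient-descent step on squared error for a single sigmoid neuron.

    'Learning' here means nudging each connection weight (the artificial
    synapse) so that the neuron's output moves closer to the target.
    """
    y = neuron_output(inputs, weights, bias)
    # Derivative of 0.5 * (y - target)**2 with respect to the weighted sum z
    delta = (y - target) * y * (1.0 - y)
    new_weights = [w - lr * delta * x for w, x in zip(weights, inputs)]
    new_bias = bias - lr * delta
    return new_weights, new_bias

# Illustrative numbers only
x = [0.8, 0.2]
w, b = [0.1, -0.3], 0.0
before = neuron_output(x, w, b)
w, b = train_step(x, w, b, target=1.0)
after = neuron_output(x, w, b)
print(f"output before: {before:.3f}, after one step: {after:.3f}")
```

A deep network stacks many such neurons in layers and applies the same weight-adjustment idea (via backpropagation) to every connection at once.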

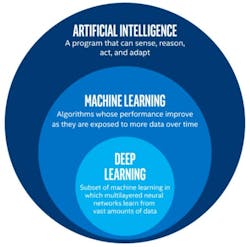

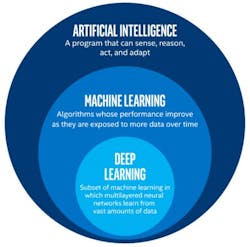


- Deep Learning – A subset of Machine Learning that uses large datasets to train large, interconnected neural networks.

- Machine Learning – A subset of AI that uses algorithms to learn from data and improve performance over time.

- Artificial Intelligence (AI) – Using machines to mimic human intelligence for problem solving and learning.

For the purposes of this discussion, we will focus on the Deep Learning subset of AI, because some of today’s sensors can run Deep Learning neural networks on board for the fastest possible decision-making.

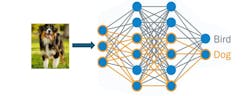

To illustrate how Deep Learning works, let’s train a neural network to sort pictures of dogs and birds into the appropriate classification. While this may seem like a trivial use of the technology, there are many applications where correctly identifying a picture or object automatically is incredibly useful but otherwise difficult. This classification would be nearly impossible with traditional rules-based machine vision, but it is straightforward with Deep Learning. In this case, we’ll train the neural network with pictures that are labeled “dog” or “bird.”

Imagine that the labeled training pictures are chopped up into thousands of segments and that, through the training process, each neuron learns a certain aspect of the training images, such as the shape of a dog’s eye versus the shape of a bird’s eye. With large training sets, Deep Learning neural networks acquire an uncanny ability to distinguish between multiple similar or dissimilar objects. An important step after the initial training is to send additional images through the neural network and have humans evaluate the output. If the neural network misidentifies certain images, those images are correctly annotated and run through the training process again, so the network “learns” to identify them correctly in the future.
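The train, review and retrain cycle described above can be sketched in a few lines of Python. This is a deliberately tiny stand-in: each “picture” is reduced to two hypothetical feature scores, and a single-layer logistic classifier replaces the deep network. The feature values, function names and training settings are all illustrative assumptions, not part of any real product.

```python
import math

# Hypothetical stand-in for labeled pictures: each image is reduced to two
# made-up feature scores (think "bird-like beak" and "dog-like snout").
# A real deep network learns its own features directly from the pixels.
LABEL_VALUE = {"dog": 0, "bird": 1}

def prob_bird(model, features):
    weights, bias = model
    z = sum(w * f for w, f in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def train(samples, epochs=200, lr=0.5):
    """Fit a single-layer (logistic) classifier by stochastic gradient descent."""
    model = ([0.0, 0.0], 0.0)
    for _ in range(epochs):
        for features, label in samples:
            err = prob_bird(model, features) - LABEL_VALUE[label]
            weights, bias = model
            model = ([w - lr * err * f for w, f in zip(weights, features)],
                     bias - lr * err)
    return model

def classify(model, features):
    return "bird" if prob_bird(model, features) >= 0.5 else "dog"

def review_and_retrain(model, training_set, new_images, human_labels):
    """Humans check the network's output on new images; any misidentified
    images are added with the correct label and the network is retrained."""
    mistakes = [(img, truth) for img, truth in zip(new_images, human_labels)
                if classify(model, img) != truth]
    if not mistakes:
        return model, training_set, 0
    training_set = training_set + mistakes
    return train(training_set), training_set, len(mistakes)

training = [([0.9, 0.1], "bird"), ([0.8, 0.3], "bird"),
            ([0.1, 0.9], "dog"), ([0.3, 0.8], "dog")]
model = train(training)

# A new batch reviewed by a human; the third picture is an unusual dog
# photo whose features look somewhat bird-like.
new_images = [[0.85, 0.2], [0.15, 0.8], [0.7, 0.4]]
human_labels = ["bird", "dog", "dog"]
model, training, n_fixed = review_and_retrain(model, training, new_images, human_labels)
print(f"{n_fixed} misidentified image(s) re-annotated and used for retraining")
```

In practice the review loop is repeated with fresh batches until the error rate on images the network has never seen is acceptable.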

Deep Learning neural networks are flexible enough either to classify images into categories or to spot abnormalities in images for applications such as quality control. Training neural networks for sensor-based applications follows essentially the same process, but the trained network is deployed directly onto the device, where it can execute the analysis rapidly without relying on power-hungry server farms.

Now that we have a better understanding of how Deep Learning works, let’s look at some airport applications where Deep Learning solves previously unsolvable problems.

Jet Bridge Autonomy

The goal is either to have the jet bridge dock to the aircraft quickly, efficiently and safely without human intervention, or to assist the operator so that the bridge docks quickly and accurately. Rapid docking greatly reduces fuel burn and engine hours, because the aircraft’s engines or Auxiliary Power Unit (APU) must supply electricity and air conditioning until the jet bridge’s ground power is connected. An additional benefit is getting passengers into the terminal more quickly.

Deep Learning provides the key enabling technology by localizing the aircraft’s passenger door and sending a stream of location telegrams to the jet bridge’s Programmable Logic Controller (PLC). The color 3D sensor also provides useful distance information, which is backstopped by an array of distance sensors. Full autonomy requires a sophisticated control system that uses a complete array of sensors to determine jet bridge extension/retraction, height, cab rotation angle and distance to the aircraft, together with outdoor-rated LiDAR safety scanners around the bogie wheels that detect people or obstructions in the jet bridge’s path.
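The article does not describe the telegram format, so the following Python sketch is purely hypothetical: it invents a 16-byte layout (sequence counter, status flag, confidence, and door offsets in millimetres) to show how a sensor might pack each door-location reading and how the controller side might unpack it and reject stale or low-confidence readings. The field layout, names and the 80% threshold are assumptions for illustration, not a SICK or PLC-vendor protocol.

```python
import struct
from dataclasses import dataclass

# Hypothetical 16-byte telegram layout (NOT a SICK or PLC-vendor format):
# big-endian uint16 sequence, uint8 status flags, uint8 confidence (%),
# then int32 x, y, z offsets of the door in millimetres.
TELEGRAM = struct.Struct(">HBBiii")
STATUS_DOOR_FOUND = 0x01

@dataclass
class DoorLocation:
    seq: int
    door_found: bool
    confidence_pct: int
    x_mm: int  # lateral offset of the door from the cab reference point
    y_mm: int  # vertical offset
    z_mm: int  # distance from the cab to the fuselage

def encode(loc):
    """Sensor side: pack one door-location reading into bytes."""
    status = STATUS_DOOR_FOUND if loc.door_found else 0
    return TELEGRAM.pack(loc.seq & 0xFFFF, status, loc.confidence_pct,
                         loc.x_mm, loc.y_mm, loc.z_mm)

def decode(data):
    """Controller side: unpack a telegram back into a DoorLocation."""
    seq, status, conf, x, y, z = TELEGRAM.unpack(data)
    return DoorLocation(seq, bool(status & STATUS_DOOR_FOUND), conf, x, y, z)

def is_usable(loc, last_seq, min_conf=80):
    """Accept a reading only if it is newer than the last one (allowing for
    16-bit wrap-around), the door was found, and confidence is high enough."""
    newer = 0 < (loc.seq - last_seq) % 65536 < 32768
    return newer and loc.door_found and loc.confidence_pct >= min_conf

msg = encode(DoorLocation(seq=7, door_found=True, confidence_pct=93,
                          x_mm=-120, y_mm=45, z_mm=2500))
reading = decode(msg)
print(len(msg), reading, is_usable(reading, last_seq=6))
```

A fixed-size binary layout like this is easy for a PLC to map onto a data block; the sequence counter lets the controller discard repeated or out-of-order readings.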

For this application, many sample images were collected under various weather and lighting conditions and across different aircraft liveries, and features such as the small round porthole, the door handle and the edging around the door itself were annotated.

After training, the neural network was downloaded to the sensor, which was then ready for use.

As part of the complete jet bridge autonomy package, the Deep-Learning-enhanced sensor enables docking in approximately 45 seconds, considerably shorter than the 2-3 minutes most airlines allot to the docking process. As an added benefit, the autonomous jet bridge remains operational during thunderstorms, when personnel are normally not allowed on the bridge. This allows passengers to deplane when they might otherwise be stuck in the aircraft until the storm passes.
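The time saving follows directly from the figures above. This short Python sketch uses only the 45-second docking time and the 2-3 minutes (120-180 seconds) airlines allot; treating every saved second as roughly one less second of APU or engine running assumes ground power is connected immediately after docking.

```python
AUTONOMOUS_DOCKING_S = 45           # approximate figure quoted above
ALLOTTED_DOCKING_S = (120, 180)     # the 2-3 minutes most airlines allot

def docking_savings(autonomous_s, allotted_s):
    """Seconds saved per docking and the percentage reduction."""
    saved = allotted_s - autonomous_s
    return saved, 100.0 * saved / allotted_s

for allotted in ALLOTTED_DOCKING_S:
    saved, pct = docking_savings(AUTONOMOUS_DOCKING_S, allotted)
    print(f"vs {allotted} s allotted: {saved} s saved ({pct:.1f}% shorter)")
```

That works out to 75 to 135 seconds saved per docking, a reduction of 62.5% to 75% in docking time.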

Human Intrusion Detection

Security at an airport is always a concern, and there are several landside areas where a person with bad intent, or simply a curious child, could slip through a security door or portal on a ticket counter baggage conveyor or a flat plate claim conveyor. Either event represents a security breach, and someone unfamiliar with baggage handling conveyors could also be seriously injured by the equipment.

The challenge is to let the full variety of checked baggage flow through the portal opening while detecting when a person tries to slip through the security door or portal, which triggers an alarm. Short of posting a security guard at every portal at great expense, no truly satisfactory solution had ever been implemented.

Now, the same color 3D sensor, running a neural network trained specifically to distinguish humans from objects, performs this task quickly, accurately and inexpensively. The training data consisted of annotated images of people of different skin tones, sizes and body shapes wearing a variety of clothing. Through parameterization, the user defines fields in which human detection is active and enables various alarm outputs (e.g., a local output or Ethernet messages). The neural network can also be parameterized to detect objects in the field of view, so the sensor can also be used for collision detection on jet bridges and other forms of Ground Service Equipment (GSE).
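The field-based alarm logic can be illustrated with a small Python sketch. It assumes the network already outputs labeled detections with a confidence and a bounding box; the field coordinates, the 0.6 confidence threshold and all names are hypothetical, and the real sensor is configured through its own parameterization rather than code like this. The key point is that only a person overlapping a configured field raises an alarm, while bags pass freely.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Box:
    """Axis-aligned rectangle in image coordinates (pixels)."""
    x0: float
    y0: float
    x1: float
    y1: float

    def intersects(self, other):
        return (self.x0 < other.x1 and other.x0 < self.x1 and
                self.y0 < other.y1 and other.y0 < self.y1)

@dataclass(frozen=True)
class Detection:
    label: str         # class predicted by the network, e.g. "person" or "bag"
    confidence: float  # 0.0 to 1.0
    box: Box

def check_fields(detections, fields, alarm_label="person", min_conf=0.6):
    """Return the names of the configured fields in which a person is detected.

    Bags and other objects never trigger the alarm, however large they are.
    """
    return [name for name, field in fields.items()
            if any(d.label == alarm_label and d.confidence >= min_conf
                   and d.box.intersects(field) for d in detections)]

# Hypothetical field covering the portal opening in a 640 x 480 image
fields = {"counter_portal": Box(200, 0, 440, 480)}
frame = [Detection("bag", 0.97, Box(250, 300, 400, 450)),
         Detection("person", 0.91, Box(300, 40, 420, 470))]
for name in check_fields(frame, fields):
    # In a real installation this would set a local output or send a message
    print(f"ALARM: person detected in field '{name}'")
```

Keying the alarm on the predicted class rather than on object size is what lets oversized bags through while still catching a small child.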

Bag Classification/Hygiene Detection

While Baggage Handling Systems (BHS) are designed to handle a very wide variety of items, some items can wreak havoc and cause jams in the system, such as:

- Cylindrical duffle bags that tend to tumble and roll on conveyor inclines

- Wheeled bags with handles extended/telescoped, causing jams around conveyor turns or a diverter system

- Children’s car seats that are not in a tote, or are incorrectly positioned in one, which often jam at the entrance to the Explosive Detection System (EDS) equipment

- Bags with long, exposed carrying straps that get stuck in conveyor transitions

- Children’s strollers not placed in a tote, whose highly irregular shape makes them difficult to transport and divert

- Large cuboidal boxes that can jam in the EDS equipment

- Backpacks or knapsacks that can tumble on inclines or have exposed straps

Detecting these problematic bags at the ticket counter, or at least before they travel far into the BHS, can greatly improve system throughput and reliability.
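Once the network has assigned a class, deciding whether an item may enter the BHS can be as simple as a lookup. The Python sketch below uses hypothetical class names loosely based on the problem items listed above (the real system’s 30+ classes and handling rules are not published here) and makes an assumed, conservative design choice: low-confidence predictions are treated as non-conveyable so they go to manual inspection rather than risking a jam.

```python
from enum import Enum

class Handling(Enum):
    CONVEYABLE = "conveyable"
    NON_CONVEYABLE = "non-conveyable"

# Hypothetical class names loosely based on the problem items listed above
NON_CONVEYABLE_CLASSES = {
    "duffel_cylindrical",
    "wheeled_bag_handle_extended",
    "car_seat_no_tote",
    "bag_loose_straps",
    "stroller_no_tote",
    "large_box",
    "backpack_loose_straps",
}

def handling_for(predicted_class, confidence, min_conf=0.7):
    """Map a predicted bag class to a handling decision.

    Assumption: predictions below min_conf are treated conservatively as
    non-conveyable so a person can check them before they enter the BHS.
    """
    if confidence < min_conf or predicted_class in NON_CONVEYABLE_CLASSES:
        return Handling.NON_CONVEYABLE
    return Handling.CONVEYABLE

print(handling_for("hard_suitcase", 0.95).value)
print(handling_for("stroller_no_tote", 0.99).value)
```

Keeping the class-to-handling table separate from the network means an airport can change which classes it accepts without retraining.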

To solve this application, hundreds of thousands of images were collected at an international airport and categorized into more than 30 classifications. Two color 3D cameras captured the images from different perspectives, providing not only color images of each bag but also its dimensions and shape. The annotated images were then fed into a Deep Learning platform to train an advanced neural network. After an initial round of training, the neural network was deployed in the BHS, where it was tested and evaluated on live bags. Any detection errors were addressed in the training data and a new neural network was trained. This process was repeated until the results were satisfactory; several systems are already in live operation at an international airport.

The Training Process
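Because the two color 3D cameras also capture geometry, a bag’s class can be complemented by its measured size. The Python sketch below shows one simple way (an assumption, not the method used in the project described) to turn 3D points into length, width and height: it assumes the points are already transformed into belt coordinates in millimetres and that the bag is roughly aligned with the direction of travel. The 5 mm belt-noise threshold is an illustrative value.

```python
from typing import Iterable, Optional, Tuple

Point = Tuple[float, float, float]  # (x along belt, y across belt, z above belt), mm

def bag_dimensions(points: Iterable[Point],
                   belt_noise_mm: float = 5.0) -> Optional[Tuple[float, float, float]]:
    """Axis-aligned length, width and height of a bag from 3D points.

    Points within belt_noise_mm of the belt surface are ignored so the belt
    itself is not counted as part of the bag. Returns None if no bag points
    remain.
    """
    pts = [p for p in points if p[2] > belt_noise_mm]
    if not pts:
        return None
    xs, ys, zs = zip(*pts)
    return (max(xs) - min(xs), max(ys) - min(ys), max(zs))

# Hypothetical points: two belt-surface returns and two points on a bag
print(bag_dimensions([(0, 0, 0), (100, 50, 2), (600, 400, 250), (150, 20, 100)]))
```

A rotated bag would need an oriented bounding box instead; the axis-aligned version is the simplest case.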

The result is a very robust baggage classification system that can not only detect non-conveyable items but also report the number of bags seen in each classification. That reporting is useful for baggage handling system Key Performance Indicators (KPIs) and gives airport operators a much better understanding of the types of bags they are handling. A key benefit of this solution is that, as with the training process described earlier, it can keep learning from newly annotated images and can be widely adapted to the specific needs of different airports.
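The per-class reporting can be sketched with Python’s standard `collections.Counter`. The class names and predictions below are hypothetical; the function simply counts bags per class and computes the percentage flagged as non-conveyable, the kind of figure that could feed a BHS KPI dashboard.

```python
from collections import Counter

def classification_report(predicted_classes, non_conveyable):
    """Per-class bag counts, total bags, and the non-conveyable share (%)."""
    counts = Counter(predicted_classes)
    total = sum(counts.values())
    flagged = sum(n for cls, n in counts.items() if cls in non_conveyable)
    share = 100.0 * flagged / total if total else 0.0
    return counts, total, share

# Hypothetical predictions for a short stretch of operation
shift = ["hard_suitcase", "soft_suitcase", "hard_suitcase", "backpack",
         "stroller_no_tote", "hard_suitcase", "car_seat_no_tote"]
counts, total, share = classification_report(
    shift, non_conveyable={"stroller_no_tote", "car_seat_no_tote"})
for cls, n in counts.most_common():
    print(f"{cls:20s} {n:4d}")
print(f"total {total}, non-conveyable {share:.1f}%")
```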

Forms of AI are already in use in airports and are gaining acceptance in a market where automation is increasingly necessary to handle ever-growing passenger volumes. How will you use this tool to solve your vexing operational problems?

About the Author

Tom Gebler

Account Director

As Account Director for Airports at SICK, Inc., Tom Gebler has spent the past decade delivering smart, engineered solutions to streamline airport operations and baggage handling systems. With 15 years at SICK and a background in logistics and material handling, Tom blends deep industry knowledge with a practical approach to solving complex challenges in aviation infrastructure.

His earlier large account management roles in the courier, express and parcel sectors laid the groundwork for his success in driving innovation in airport technology. Today, Tom continues to shape the future of airport efficiency, one solution at a time.